Working with Cloud Storage (s3). Golang vs Rust vs Python. Who shall emerge victorious?

I’ve always been a firm believer in using the right tool for the job. Sometimes I look at a piece of code … and ask … why? I mean just because you can do something doesn’t mean that you should. I see a lot of my job as someone who writes code … as not just my ability to write code, but the ability to reason about problems and design simple and elegant solutions that solve the problem at hand.

I try not to let my love of a tool, language, or package color my view of the world as it is. In fact, there is wisdom to be found in being critical of those languages and tools you love the most. Be aware of their shortcomings and failures. This leads to better software and architecture designs, and less complexity. Too often I’ve seen folks picking their tool of choice and then sticking with it till the bitter end, and it usually is bitter. There is more to life than writing obtuse Scala code that is illegible for some mundane task.

This sort of thing is a blight on everyone and every system. Now I must descend from my high horse and join the peasants on the dusty road of life. Today I want to look at some very common Data Engineering tasks, namely cloud storage, and what it is like to do such a thing with Golang, Rust, and Python. I will let you draw your own conclusions. Maybe. Code available on GitHub.

Simple Things … like s3.

Some things should be simple, like cloud storage. Just when I think I’ve escaped having to mess around with more files in s3, it grabs me in its icy clutches. There is probably nothing more quintessentially Data Engineering than messing around in s3. No matter how many times I do it, I still manage to do something wrong when messing around with files: copying files into the wrong spot, being unable to find something, deleting something I shouldn’t. It’s all part of life.

I’m going to try one simple task in each language, starting with Golang. I have to say, Golang has been my new favorite little pet for a few months now; it’s simply enjoyable and fun to work with. It better not disappoint me. Here are a few things I will be paying attention to:

- SDK support for the language.

- How verbose it is to do a simple task.

- Performance.

- Documentation.

- How enjoyable was the experience.

Golang with s3.

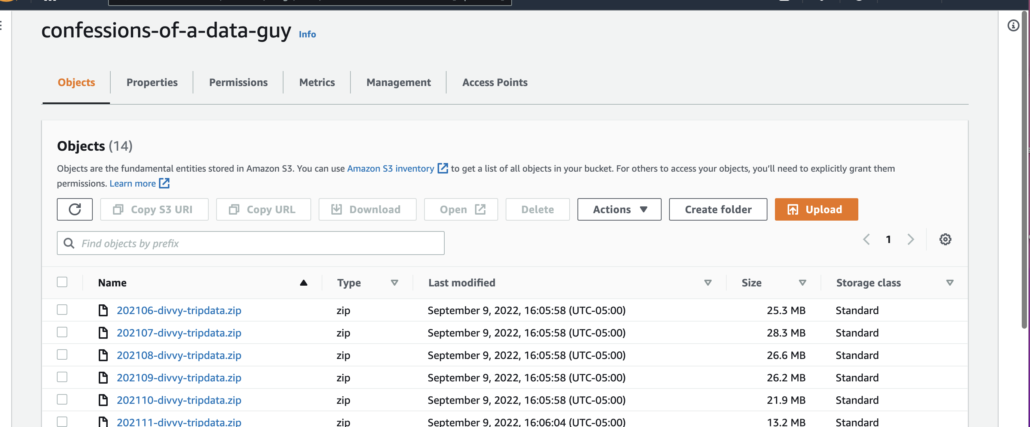

What could be easier than listing objects in an s3 bucket and using a filter or prefix when looking for certain files? Very common indeed. Let’s see what Golang has for us. I have an AWS bucket filled with files, so let’s try to get the files from January 2022, aka 202201. I have files from both 2021 and 2022, so this will require filtering the results via the SDKs.
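To pin down what "filtering by prefix" means before touching any SDK, here is a minimal sketch in plain Python with no AWS calls. It assumes the prefix filter is a simple starts-with match on the object key, which is how the results later in this post behave. The 2021 key names and the `filter_by_prefix` helper are made up for illustration; only the 202201 key appears in the real bucket output.

```python
# Hypothetical key names; only the 202201 key appears in this post's output.
keys = [
    "202111-divvy-tripdata.zip",
    "202112-divvy-tripdata.zip",
    "202201-divvy-tripdata.zip",
]


def filter_by_prefix(keys, prefix):
    """Return the keys that start with `prefix` (a starts-with match)."""
    return [k for k in keys if k.startswith(prefix)]


january = filter_by_prefix(keys, "202201")
print(january)
```

A prefix is not a glob or a substring search, so a shorter prefix like "2021" would match both of the made-up 2021 keys and nothing from 2022.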

Forgive me for my terrible Golang, or not. The worst is probably yet to come. Luckily Golang does have an official AWS SDK, which always makes life easier.

    package main

    import (
        "fmt"
        "os"
        "time"

        "github.com/aws/aws-sdk-go/aws"
        "github.com/aws/aws-sdk-go/aws/session"
        "github.com/aws/aws-sdk-go/service/s3"
    )

    func main() {
        // Time the whole run so it can be reported as "main took ...".
        start := time.Now()

        // Credentials are left blank here; supply your own.
        os.Setenv("AWS_ACCESS_KEY_ID", "")
        os.Setenv("AWS_SECRET_ACCESS_KEY", "")

        sess := getAWSSession()
        svc := s3.New(sess)
        rsp := listBucket(svc, "202201")
        fmt.Println(rsp)
        fmt.Printf("main took %s\n", time.Since(start))
    }

    // getAWSSession creates a session pinned to us-east-1 and exits on failure.
    func getAWSSession() *session.Session {
        sess, err := session.NewSession(&aws.Config{
            Region: aws.String("us-east-1"),
        })
        if err != nil {
            fmt.Println(err)
            os.Exit(1)
        }
        return sess
    }

    // listBucket lists the objects whose keys start with searchString.
    func listBucket(svc *s3.S3, searchString string) *s3.ListObjectsV2Output {
        bucket := "confessions-of-a-data-guy"
        resp, err := svc.ListObjectsV2(&s3.ListObjectsV2Input{
            Bucket: aws.String(bucket),
            Prefix: aws.String(searchString),
        })
        if err != nil {
            fmt.Println(err)
            os.Exit(1)
        }
        return resp
    }

Golang didn’t disappoint me. It didn’t feel strange to use the AWS SDK for Golang to list s3 bucket objects. Setting up a new Session with session.NewSession(&aws.Config{Region: aws.String("us-east-1")}) did seem slightly awkward. Filtering the bucket felt about the same, but all in all, it seemed concise and not overly verbose.

    (base) danielbeach@Daniels-MacBook-Pro gos3 % go run go_with_s3.go
    {
      Contents: [{
          ETag: "\"356a4945f5fdbd8ffe82ddd3c9447eca\"",
          Key: "202201-divvy-tripdata.zip",
          LastModified: 2022-09-09 21:06:09 +0000 UTC,
          Size: 3837661,
          StorageClass: "STANDARD"
        }],
      IsTruncated: false,
      KeyCount: 1,
      MaxKeys: 1000,
      Name: "confessions-of-a-data-guy",
      Prefix: "202201"
    }
    main took 242.045583ms

- SDK support for the language. – Yes

- How verbose it is to do a simple task. – Ehh

- Performance. – Fast

- Documentation. – Good

- How enjoyable was the experience. – Decent

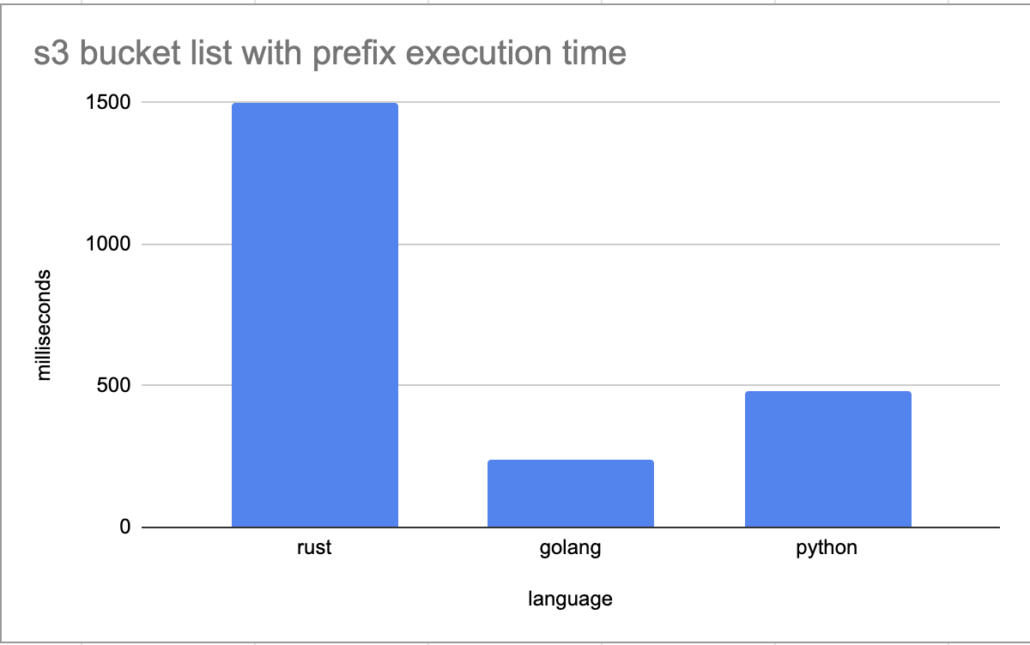

Pretty fast at 242.045583ms, nothing to sniff at. Well, no time to rest, we must be moving along. Next up Rust.

Rust with s3.

Not going to lie, a little scared of this one. Who knows what monstrosity I will produce, a creature of darkness that will scar me no doubt. This is made even more sure by the fact that the official Rust AWS SDK is still in developer preview. Such is life.

Much to my surprise, although the documentation was a little hard to follow … Rust did me a solid on that one. Simple, straightforward, and dare I say maybe even less verbose than Golang? What a breath of fresh air, maybe there is hope for the world after all.

    use aws_config::meta::region::RegionProviderChain;
    use aws_sdk_s3 as s3;
    use std::env;
    use std::time::Instant;

    #[tokio::main]
    async fn main() -> Result<(), s3::Error> {
        // The clock starts before client setup, so setup is part of the timing.
        let now = Instant::now();

        // Credentials are left blank here; supply your own.
        let key = "AWS_ACCESS_KEY_ID";
        env::set_var(key, "");
        let secret = "AWS_SECRET_ACCESS_KEY";
        env::set_var(secret, "");

        // Use the default region provider chain, falling back to us-east-1.
        let region_provider = RegionProviderChain::default_provider().or_else("us-east-1");
        let config = aws_config::from_env().region(region_provider).load().await;
        let client = s3::Client::new(&config);

        // List the objects whose keys start with "202201".
        let objects = client
            .list_objects_v2()
            .bucket("confessions-of-a-data-guy")
            .prefix("202201")
            .send()
            .await?;
        println!("{:?}", objects);

        let elapsed = now.elapsed();
        println!("Elapsed: {:.2?}", elapsed);
        Ok(())
    }

I mean dang, this is impressive for a low-level language like Rust, I was expecting more antics and hoops to jump through I guess. I figured Rust would be the DMV of all the languages I’m trying out here.

    ListObjectsV2Output { is_truncated: false, contents: Some([Object { key: Some("202201-divvy-tripdata.zip"), last_modified: Some(DateTime { seconds: 1662757569, subsecond_nanos: 0 }), e_tag: Some("\"356a4945f5fdbd8ffe82ddd3c9447eca\""), checksum_algorithm: None, size: 3837661, storage_class: Some(Standard), owner: None }]), name: Some("confessions-of-a-data-guy"), prefix: Some("202201"), delimiter: None, max_keys: 1000, common_prefixes: None, encoding_type: None, key_count: 1, continuation_token: None, next_continuation_token: None, start_after: None }
    Elapsed: 1.55s

One thing I don’t get is why Rust, at a sluggish 1.55 seconds, is way slower than Golang at 242.045583 ms. Is this a case of async going wrong? Honestly though, the Rust looks and smells a little nicer than Golang, much to my chagrin. So much to do, so little time, let’s move on to Python, my old friend.

- SDK support for the language. – Almost.

- How verbose it is to do a simple task. – Not at all.

- Performance. – Slow

- Documentation. – Ehh

- How enjoyable was the experience. – Very

Python with s3.

We all know this is going to be the easiest up front, don’t we? The thing with Python is that it’s easily the most popular language for Data Engineering, so it’s always wise to compare tasks in other languages to Python. Some part of me says when you are working on “simple” tasks like cloud storage and s3 files you should probably pick the least verbose, easiest-to-understand tool in your pocket.

Yeah, not much to say about this.

    import boto3
    import os
    from datetime import datetime

    t1 = datetime.now()

    # Credentials are left blank here; supply your own.
    os.environ['AWS_ACCESS_KEY_ID'] = ''
    os.environ['AWS_SECRET_ACCESS_KEY'] = ''

    s3 = boto3.resource('s3')
    bucket = s3.Bucket('confessions-of-a-data-guy')

    # Print every key that starts with the 202201 prefix.
    for obj in bucket.objects.filter(Prefix='202201'):
        print(obj.key)

    t2 = datetime.now()
    print("I took {x}".format(x=t2-t1))

Pretty hard to argue about that one. And faster than Rust, haha; there must be an obvious explanation for that one. Either way, it’s hard not to choose the option that is so simple and straightforward.

    (base) danielbeach@Daniels-MacBook-Pro pys3 % python3 s3_python.py
    202201-divvy-tripdata.zip
    I took 0:00:00.479325

- SDK support for the language. – Yes-um.

- How verbose it is to do a simple task. – Not at all.

- Performance. – Fast-ish

- Documentation. – Good

- How enjoyable was the experience. – Very

Thoughts

Wasn’t really expecting that at all, but that’s usually how it works. I thought Rust would be the fastest, but it was the slowest. I thought Rust would be the worst to write, but it was easy and one of the best, not far behind Python. Now it’s got me wondering: how would this have looked in Scala?
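A caveat on those numbers: each timing above is a single run, and the Rust and Python timers start before the client is even created, so setup cost is mixed in with the request itself. Here is a minimal sketch of a fairer harness, assuming you wrap the listing call in a function. The `time_call` and `summarize` helpers and the `fake_listing` stand-in are my own names for illustration, not part of any SDK.

```python
import statistics
import time


def time_call(fn, repeats=5):
    """Call fn() `repeats` times and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Split the first (cold) run from the median of the remaining (warm) runs."""
    cold, warm = durations[0], durations[1:]
    return {"cold": cold, "warm_median": statistics.median(warm) if warm else None}


# Stand-in workload; swap in a function that performs the real bucket listing.
def fake_listing():
    time.sleep(0.01)


result = summarize(time_call(fake_listing, repeats=5))
print(result)
```

Reporting the cold run separately from the warm median would show whether a slow result comes from one-time setup or from the request itself. I have not rerun the comparison this way, so the rankings above stand as measured.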

I wasn’t expecting Golang to be the most verbose and strange one of them all, but that’s ok, it wasn’t too bad, and it was fast. Would it really matter in production? I don’t know. Here are the things I know matter when picking a language for production work on s3 files:

- Least amount of code. (Less to break, less to manage, less to reason about.)

- Speed does matter. Sometimes you have millions of files in buckets, and you need to do a lot of work. But in the end, would it really end up mattering? Maybe. Rust seems annoyingly slow.

- I would probably choose Python over Golang because it’s nearly as fast for this task (479 ms vs. 242 ms) and wayyy less code.
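On the "millions of files" point: the outputs above show a max keys value of 1000 and a truncated flag set to false, so one request was enough here. With a bigger bucket you have to follow continuation tokens page by page. Below is a rough sketch of that loop, assuming a client whose `list_objects_v2` method takes and returns the same fields as the boto3 client call. The `iter_keys` helper and the in-memory `FakeS3` class are hypothetical stand-ins so the example runs without AWS credentials.

```python
def iter_keys(client, bucket, prefix):
    """Yield every key under `prefix`, following continuation tokens page by page."""
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = client.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            yield obj["Key"]
        if not page.get("IsTruncated"):
            break
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


class FakeS3:
    """Hypothetical in-memory stand-in that pages results like ListObjectsV2."""

    def __init__(self, keys, page_size=2):
        self.keys = sorted(keys)
        self.page_size = page_size

    def list_objects_v2(self, Bucket, Prefix="", ContinuationToken=None):
        matches = [k for k in self.keys if k.startswith(Prefix)]
        start = int(ContinuationToken or 0)
        chunk = matches[start:start + self.page_size]
        end = start + len(chunk)
        page = {
            "Contents": [{"Key": k} for k in chunk],
            "IsTruncated": end < len(matches),
        }
        if page["IsTruncated"]:
            page["NextContinuationToken"] = str(end)
        return page


# Made-up keys for five months of 2022, served two per page by the fake client.
fake = FakeS3([f"2022{m:02d}-divvy-tripdata.zip" for m in range(1, 6)])
all_2022 = list(iter_keys(fake, "example-bucket", "2022"))
print(all_2022)
```

In real boto3 code you likely would not write this loop by hand: the `bucket.objects.filter(...)` collection used in the Python example pages through results for you, and the low-level client offers `get_paginator("list_objects_v2")`.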

Let me know what you use to work with cloud storage like s3. What do you like and dislike, and why? Code available on GitHub.