The Ultimate Data Engineering Chadstack. Running Rust inside Apache Airflow.


Is there anything more Chad than Apache Airflow … and Rust? I think not, you wimp. What two things do I love most? At the moment, Rust and Airflow are at least somewhere near the top of that list. I wring my hands sometimes, wishing that things and technologies would somehow come together into some bubbling soup and witch’s concoction from the depths. Then I had a strange thought while lying in bed one night.

What would happen if I ran my Rust inside my Apache Airflow? Would the sun go dark? Would SQL Servers everywhere puke up their log files and go to Davy Jones’s locker? Would birds fall from the sky? Why hasn’t anyone done this before? Why isn’t anyone making this happen in real life?

Chadstack – Airflow + Rust

I’ve been using Airflow since before it was cool. Back when I had to deploy it myself onto a crappy Kubernetes cluster using some terrible Docker images. What a nightmare. But, like the moss growing on the exercise equipment you bought during COVID, I get tired of the same ole’ thing, the same ole’ Airflow, same ole’ Python.

Airflow is terrible at actually processing data. But, that’s probably just Python’s fault, not Airflow’s as much, or is that the same thing? Apache Airflow is the best at orchestration and dependency management, logging, monitoring, and things of that nature. It sucks at plain old data processing. A lot of this has to do with everyone using Pandas to do stuff, even though you don’t want them to. Stinkers.

Give me Rust or give me death!

The ultimate Data Engineering Chadstack.

What if we could combine the power of Airflow with Rust? Well, of course, if we were running some Airflow deployment of our own on Kubernetes, maybe we could bake the Rust toolchain, or a compiled binary, into the Docker image for our Airflow worker. But, sadly, most people aren’t running this sort of setup, and that sounds like not much fun.

Most folks are probably using managed services like GCP Composer, AWS MWAA, or Astronomer. So we need another way to run Rust inside an Apache Airflow worker. Something that took me a while to realize in life: complexity is not always born out of genius. Engineers should strive for simplicity whenever and wherever possible.

I think it’s harder to find a simple and elegant solution that has long-term staying power, rather than the shortsighted complexity that leads to pain and suffering.

So how could we quickly and easily combine Airflow and the power of Rust for data processing so that anyone could do it?

- Write Rust to do data processing.

- Package Rust into binary.

- Deploy binary to s3.

- Have Airflow download the binary and process the data.

- Done.

Why is this attractive from a Data Engineering perspective?

- Rust be fast.

- It would allow separate code development repositories (Python vs. Rust).

- It makes for easy CI/CD and automatic deployment.

  - Auto-build Rust into binary

  - Auto-deploy binary to s3

Putting theory into practice.

All code on GitHub.

The question still remains. Can we make this Frankenstein data pipeline a reality? Never say never. First, we need to come up with a simple Data Engineering problem that Rust could solve much faster than Python. No problem, let’s reach back into the distant past for one … circa February 2023 … a lifetime ago.

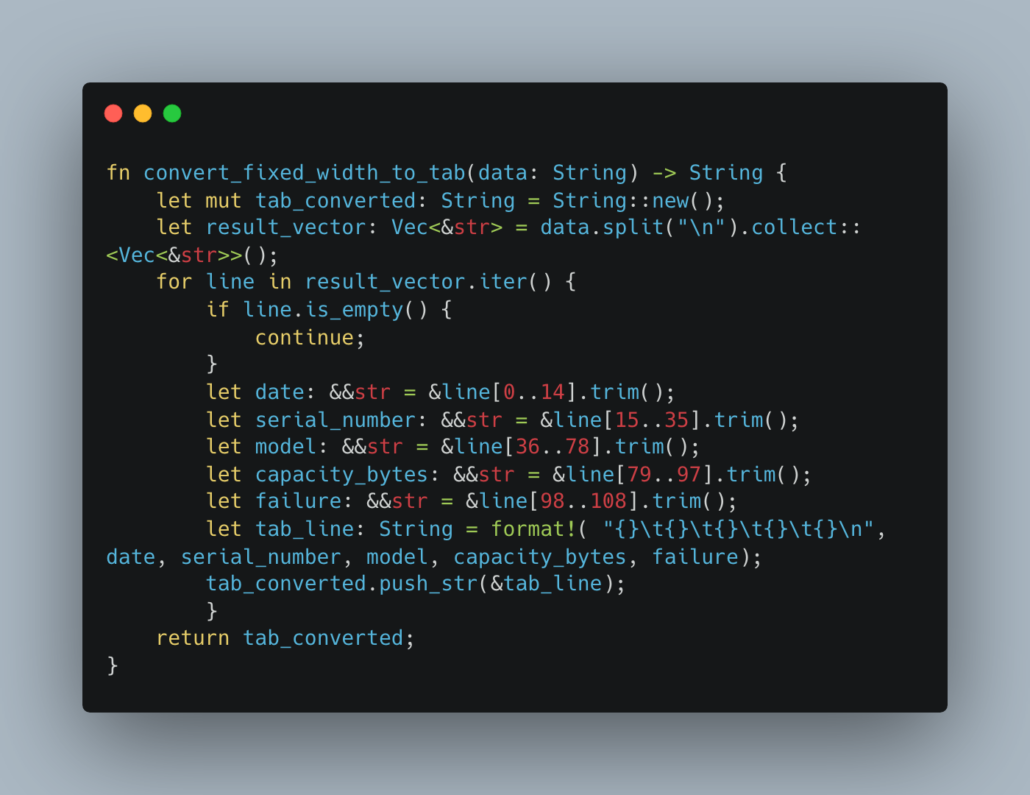

At that time I wrote a comparison of Rust vs Python inside an AWS Lambda doing fixed-width to tab flatfile conversion. Rust was 60% faster and used less memory. This is perfect for our project.

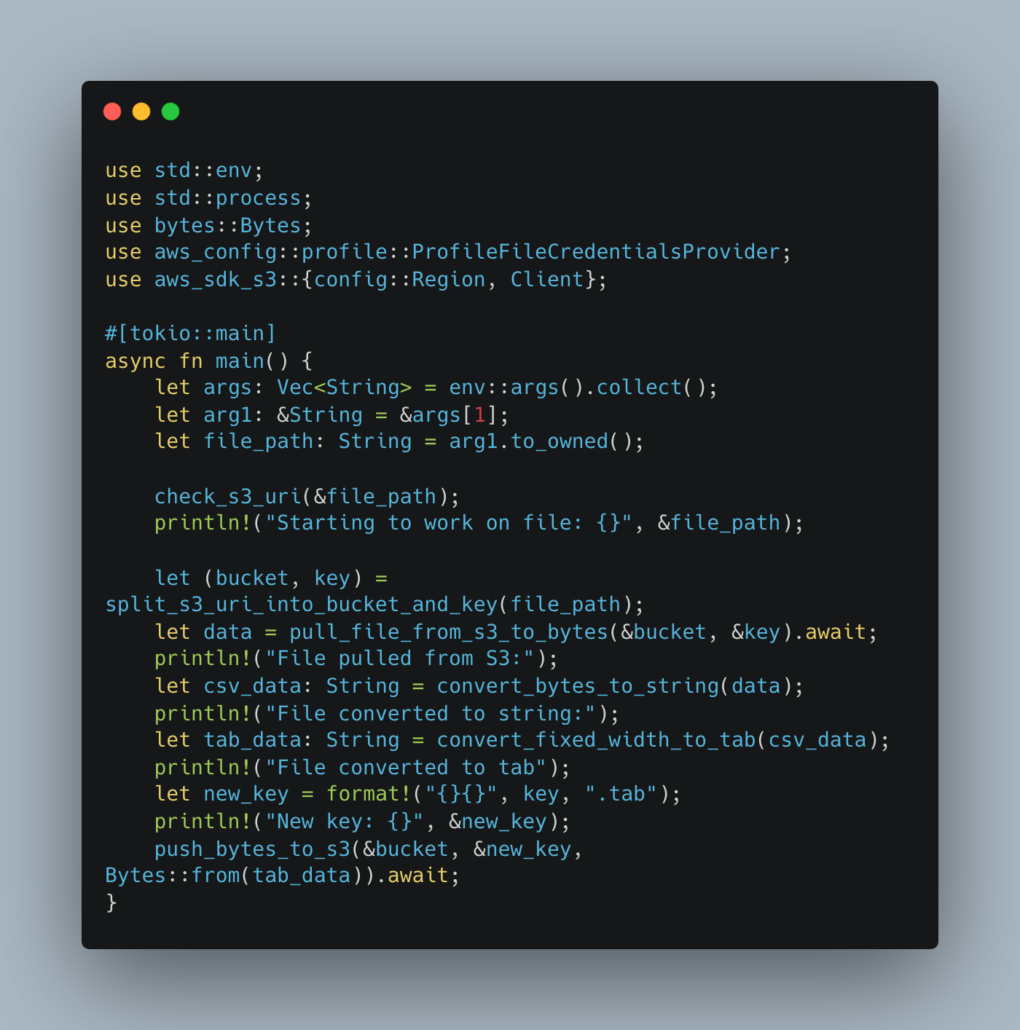

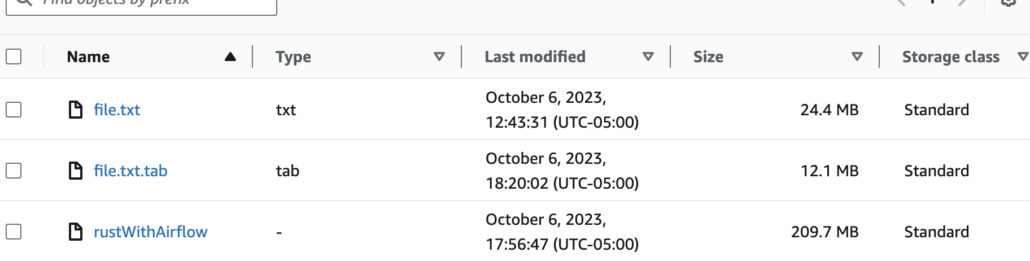

We could have an Airflow worker that triggers on some file being created in s3, and basically, we hand that s3 file URI to a Rust program that converts the fixed-width file to tab-delimited, and deposits said file back into s3.
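For anyone who hasn’t seen the conversion itself, here is a minimal sketch of the fixed-width to tab-delimited logic. It is written in Python purely for illustration and is not the Rust program from this post. The column widths and sample records are made up, and the real file layout may differ.

```python
from typing import Iterable, List, Sequence


def fixed_width_to_tab(line: str, widths: Sequence[int]) -> str:
    """Slice one fixed-width record into fields and join them with tabs."""
    fields: List[str] = []
    start = 0
    for width in widths:
        # Each field occupies exactly `width` characters; padding is stripped.
        fields.append(line[start:start + width].strip())
        start += width
    return "\t".join(fields)


def convert_lines(lines: Iterable[str], widths: Sequence[int]) -> List[str]:
    """Convert every non-blank record; blank lines are skipped."""
    return [
        fixed_width_to_tab(line.rstrip("\n"), widths)
        for line in lines
        if line.strip()
    ]


# Hypothetical layout: 10-char id, 12-char name, 8-char date.
WIDTHS = (10, 12, 8)
sample = [
    "0000000001" + "Alice".ljust(12) + "20230201\n",
    "0000000002" + "Bob".ljust(12) + "20230202\n",
]
for row in convert_lines(sample, WIDTHS):
    print(row)
```

The Rust version does the same slicing, with the s3 download and upload wrapped around it.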

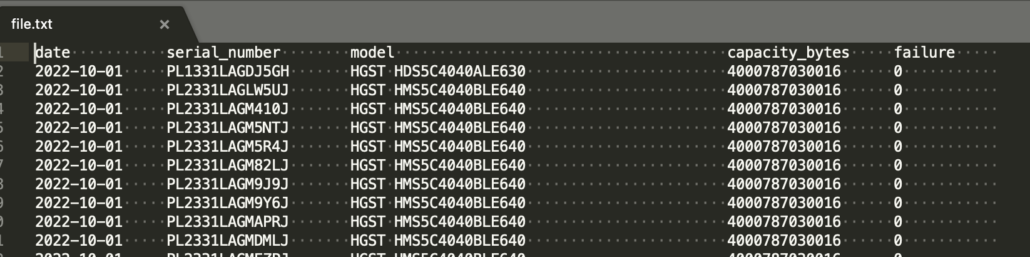

Here is what our raw fixed-width data looks like.

Stored in s3.

So what we want to happen in general with our Airflow + Rust setup is that we have a DAG of some sort or another that gets triggered, retrieves our Rust binary from s3, then executes that binary against an s3 file URI, at which point the Rust should do the heavy lifting.

Now before you yell and scream, like the bunch of rapscallions you are, about this workflow … just humor me. Baby steps my friend. Of course, we would have some more ingenious way to get our Rust code or binary onto our Airflow worker for a long-term production use case.

But, talk about being able to do the heavy lifting on an Airflow Worker … that’s what Rust is for baby.

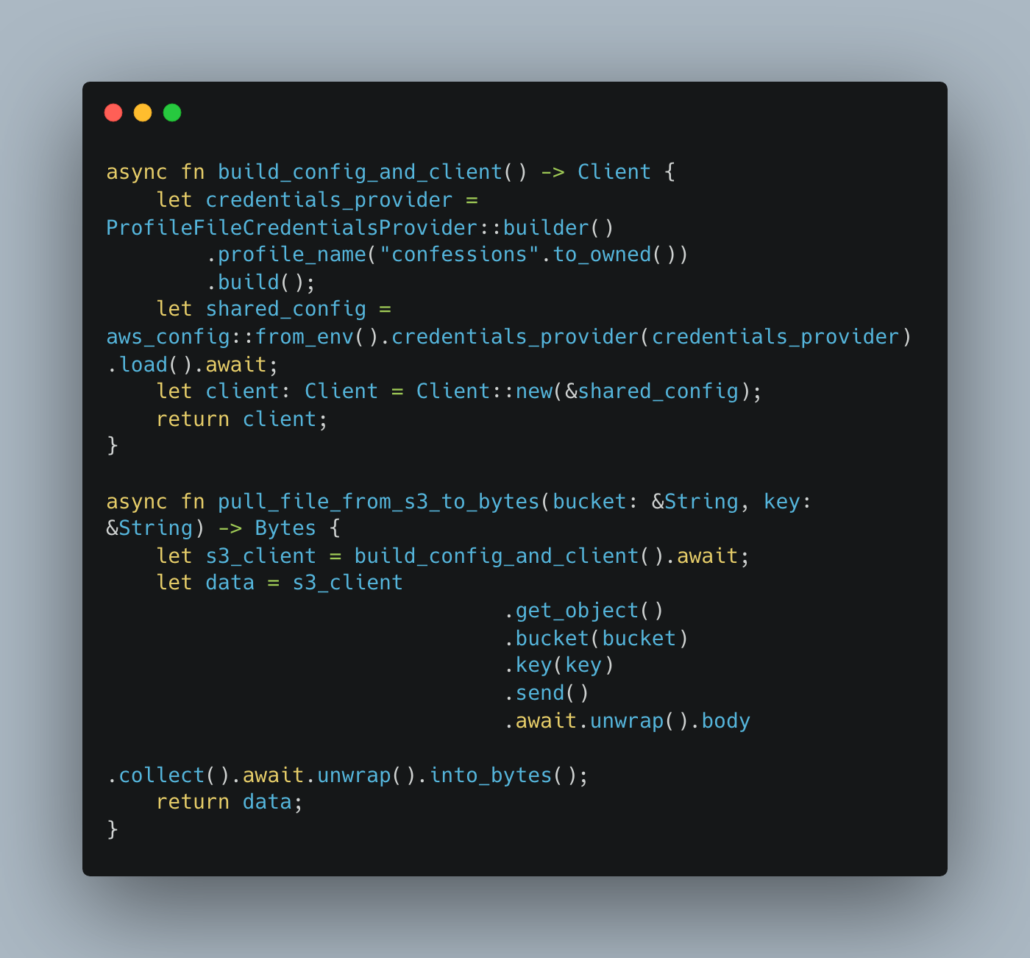

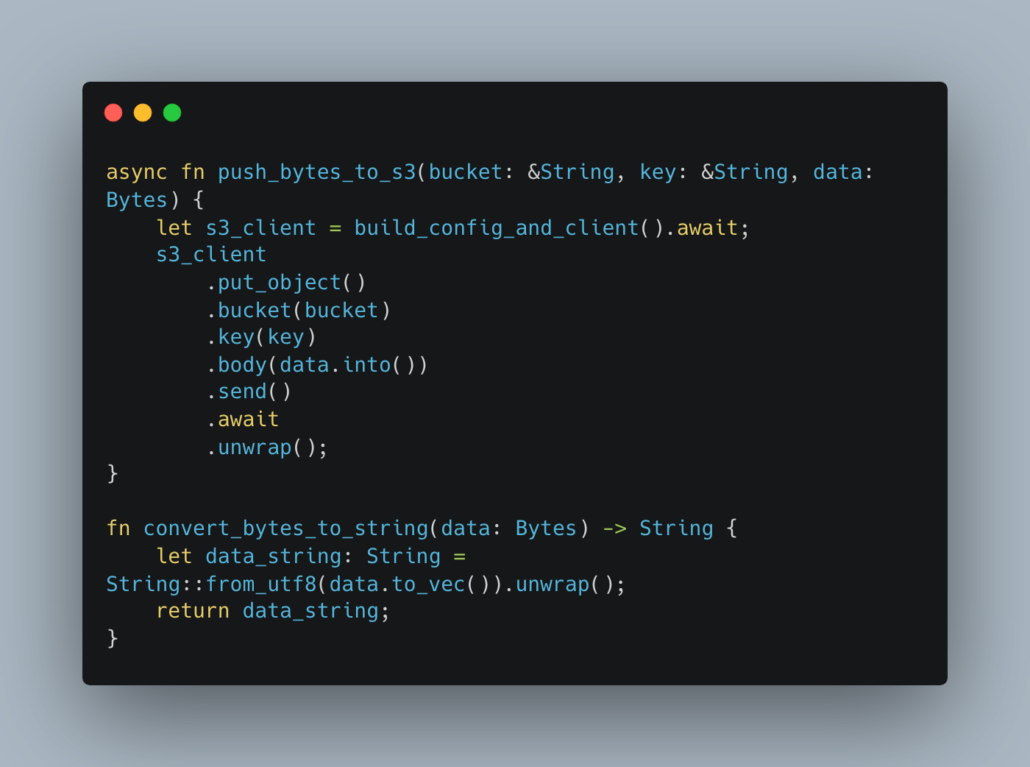

Anyway, here is my (not so) wonderful Rust code to do the work of downloading a file from s3, converting it from fixed-width to tab-delimited, and shoving that resulting data back to s3.

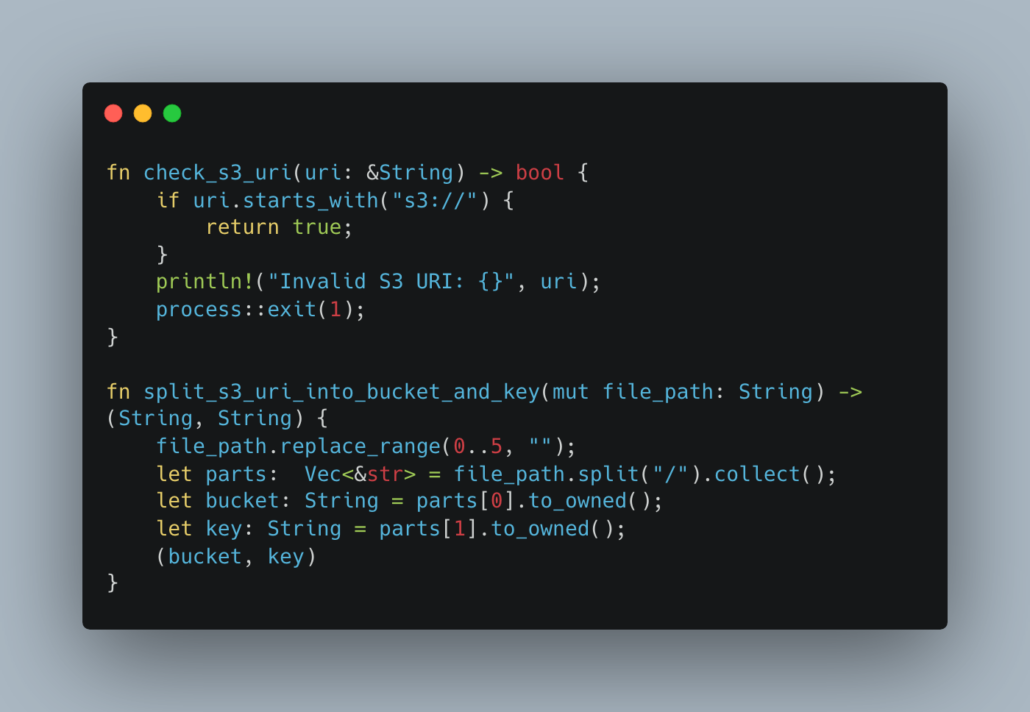

And the functions for the above.

Son of a gun … that’s a lot of Rust. It better be worth it. Of course, we have to build this Rust into a binary. Luckily cargo is a beast.

If you’ve never had the pleasure, or misfortune, to cross-build a Rust binary on Mac for a Linux (Airflow) platform … these are the steps I followed to get the binary. https://hackernoon.com/cross-compiling-rust-on-macos-to-run-as-a-unikernel-ff1w3ypi

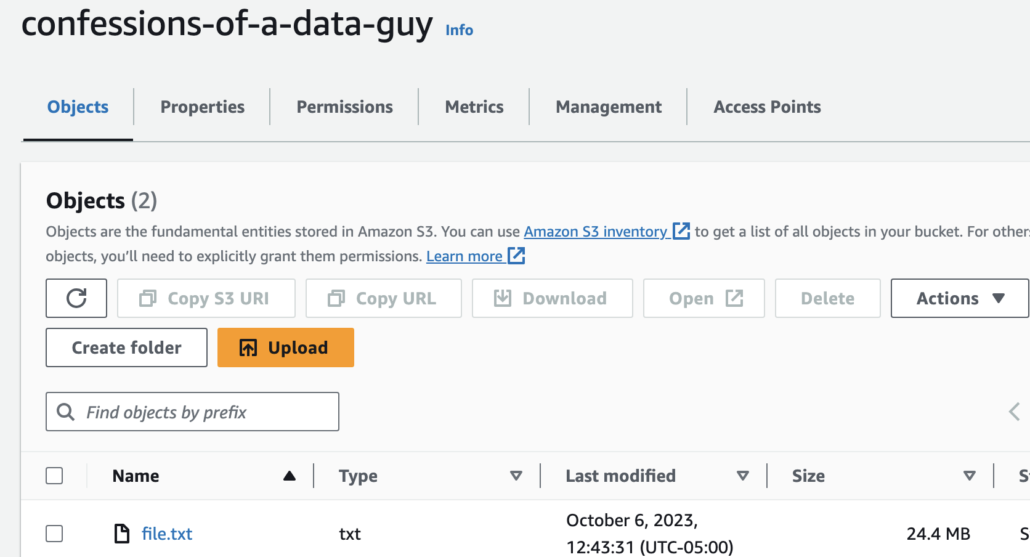

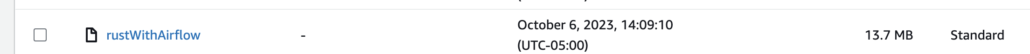

There is my binary sitting in s3 … just waiting to be picked up.

Airflow Time.

Well … I guess we are half done. Now comes the part of making this work with Airflow. For our purposes, we will be using the Astro CLI to help us spin up a local Airflow environment for testing our idea.

If you are unfamiliar with using the Astro CLI for locally running Airflow, do some more reading on your own time, you lazy bum.

Luckily, I have a bash file that launches a local Airflow environment for me and sets up whatever requirements I need.

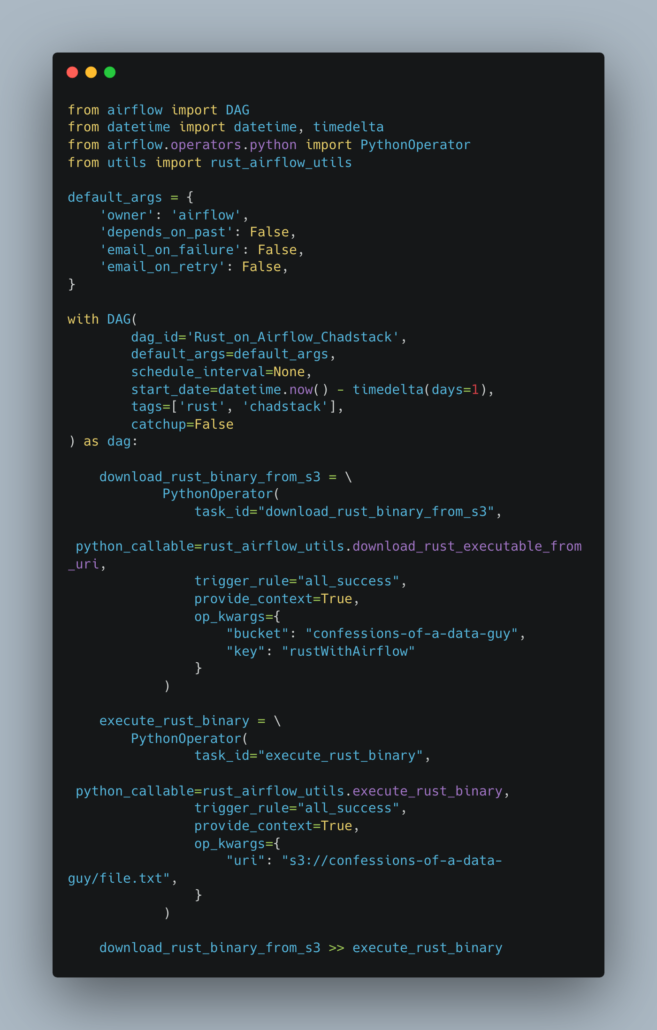

Well … now I suppose we need a DAG in Airflow to do this Frankenstein work. Here you be you little hobbit.

Our DAG is “simple.” It has two PythonOperators: the first downloads our Rust binary file from s3, and the second actually executes that binary on the Airflow worker, passing an s3 file for transformation as an argument.

- Download Rust binary from s3 onto the local Airflow worker.

- Trigger Rust binary with s3 file argument.
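The two steps above can be sketched as plain Python callables. This is a hedged sketch, not the code from the screenshots: the function names, the bucket, key and path parameters are all hypothetical, and it assumes boto3 is installed on the worker. A freshly downloaded file normally has no execute bit set, so the sketch adds it before running anything.

```python
import os
import stat
import subprocess


def make_executable(path: str) -> None:
    """Add execute bits for user, group and other (like chmod a+x)."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


def download_binary(bucket: str, key: str, dest: str) -> str:
    """Fetch the Rust binary from s3 and mark it executable."""
    import boto3  # imported lazily; assumes boto3 exists on the worker

    boto3.client("s3").download_file(bucket, key, dest)
    make_executable(dest)
    return dest


def run_binary(path: str, s3_uri: str) -> str:
    """Run the binary with the s3 file URI as its only argument."""
    result = subprocess.run(
        [path, s3_uri],       # argument list, so no shell quoting issues
        capture_output=True,  # keep stdout/stderr for the task log
        text=True,
        check=True,           # raise CalledProcessError on non-zero exit
    )
    return result.stdout
```

In a DAG, each function would be handed to a PythonOperator through `python_callable`, with the bucket, key, and file URI passed in `op_kwargs`.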

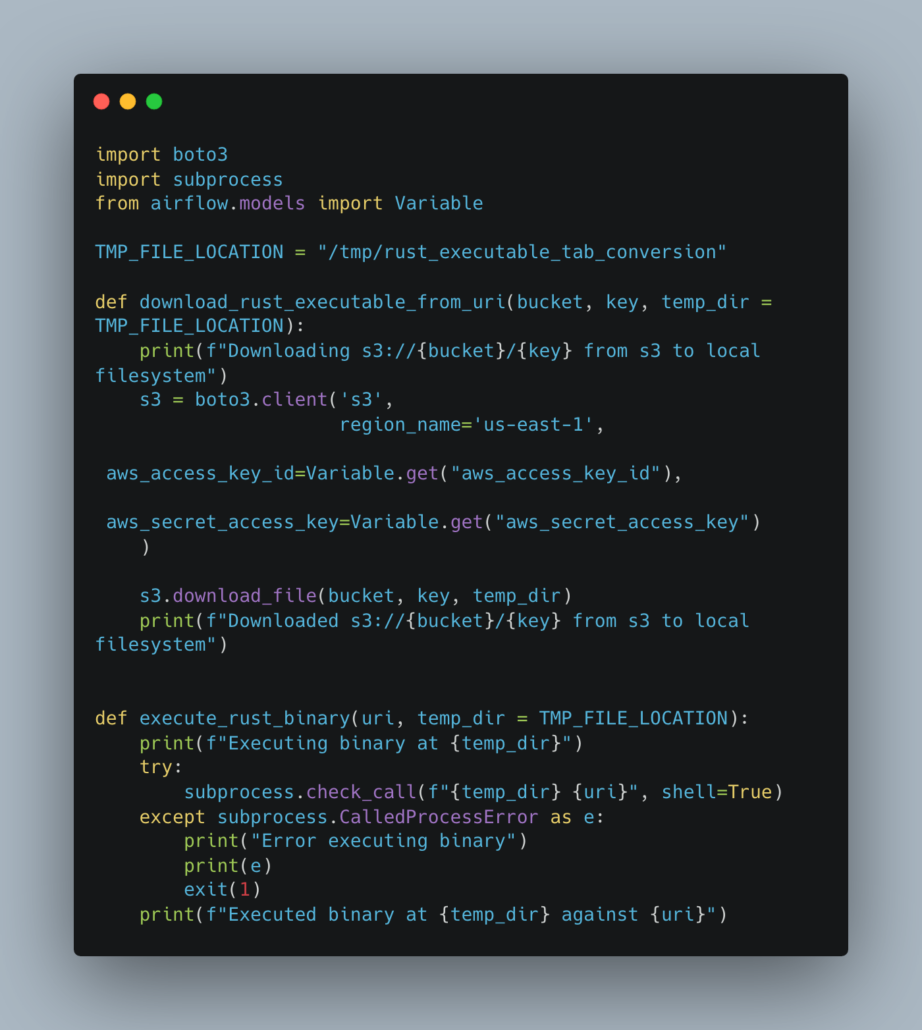

Here is the PythonOperator code.

Not bad!

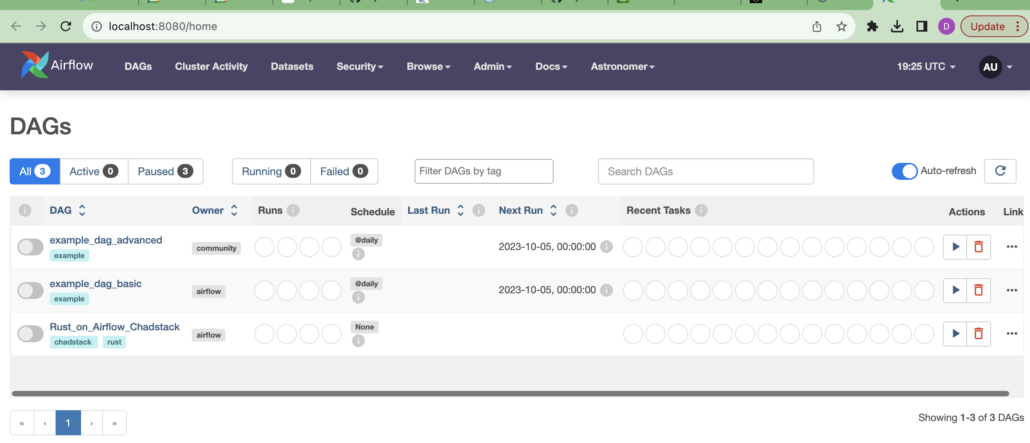

After I ran my Astro CLI bash script to copy my crud over, set environment variables, and the like, I had a nice UI with my DAG.

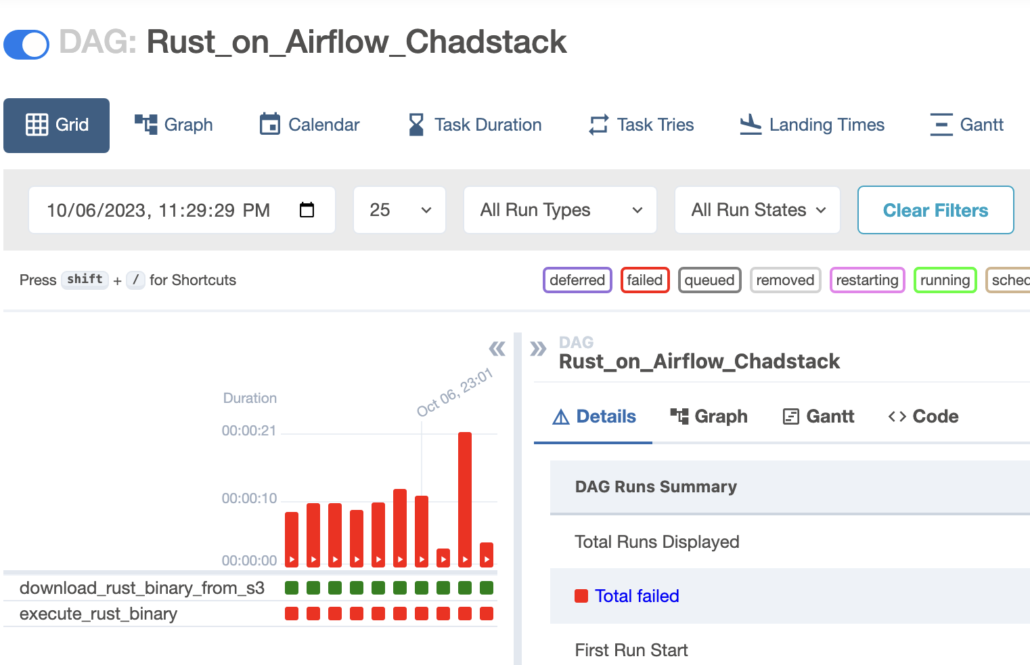

After a few runs and errors, getting AWS credentials into the environment correctly, and running through many iterations … as you can see below … I got to the point where the first task was passing … aka downloading the Rust binary from s3 and trying to trigger it.

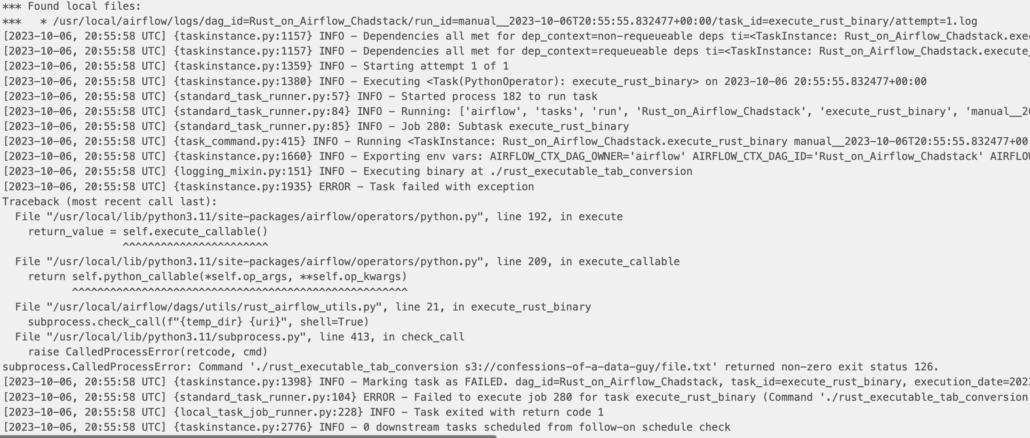

Here you can see in the logs that the Rust binary is downloaded and the next task attempts to trigger it with the correct s3 file command. But this is where it errors. It doesn’t like the command.

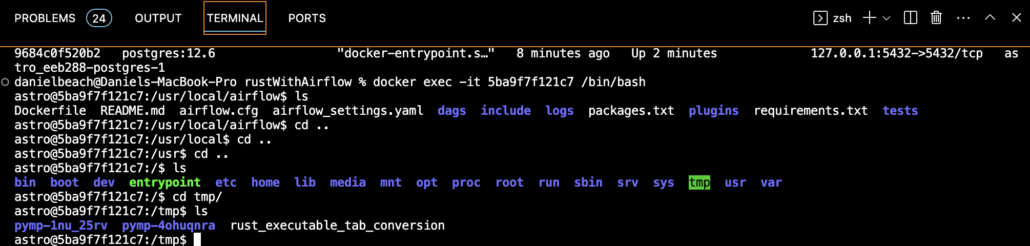

I even used some Docker commands to drop into the Airflow scheduler container and actually look at the Rust binary that was downloaded. It exists, confirming the first half of the DAG is working fine.

You can see this above. There is an executable. After researching the exit status 126 thrown back by the PythonOperator, I decided it was a permissions issue (since the Rust binary worked fine locally).

I did a simple chmod a+x rust_executable_tab_conversion to ensure the proper execute permissions were available on the file. I then re-ran the failed task a few times over. Still no luck. This time, a 101 error.

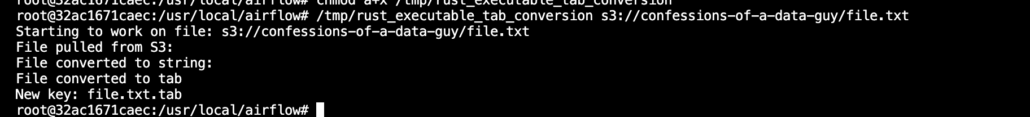

To prove my idea was not completely crazy, I just executed the command printed in the Airflow logs while logged into the scheduler container holding the Rust binary.

It worked like a charm!!! From the Airflow worker the Rust binary executed and did the work on the file and pushed it out to s3!!!

But why won’t the Airflow worker run the same command through the DAG that I can do from within that worker’s command line myself?

I don’t know. It probably has something to do with permissions, even though it’s clear the Worker is finding the binary and trying to execute it … it’s probably running under another Linux user maybe? Although I thought my chmod execution command for all users would have fixed that.
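Two facts about those exit codes help narrow things down. By shell convention, 126 means the command was found but could not be executed, and 127 means it was not found. A Rust program whose main thread panics exits with status 101. So the move from 126 to 101 suggests the chmod worked and the binary started, then panicked. I can’t tell from the logs why it panicked. One guess, and it is only a guess, is that the task’s process sees a different environment (AWS credentials, for example) than my interactive shell. The sketch below, with hypothetical names, captures stderr so the panic message would land in the task log.

```python
import subprocess
from typing import Dict, List, Optional

# Conventional meanings; anything else depends on the program itself.
EXIT_HINTS = {
    101: "Rust panic: the binary started, then panicked (read stderr)",
    126: "found but not executable (permissions or wrong platform)",
    127: "command not found",
}


def explain_exit_code(code: int) -> str:
    """Return a short hint for well-known exit codes."""
    return EXIT_HINTS.get(code, "no standard meaning; read stderr")


def run_and_report(cmd: List[str], env: Optional[Dict[str, str]] = None) -> int:
    """Run cmd, print a hint and stderr on failure, return the exit code."""
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, env=env)
    except FileNotFoundError:
        print(f"exit 127: {explain_exit_code(127)}")
        return 127
    except OSError as err:
        # Covers a missing execute bit and an exec format error.
        print(f"exit 126: {explain_exit_code(126)} ({err})")
        return 126
    if result.returncode != 0:
        print(f"exit {result.returncode}: {explain_exit_code(result.returncode)}")
        print(result.stderr)
    return result.returncode
```

Passing an explicit `env` makes it easy to test whether missing variables are the difference between the DAG run and the manual run.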

Conclusion.

Even though I was shot through the leg on this project with one foot over the finish line … I’m sticking to my guns. Rust + Apache Airflow is going to be the new Chadstack.

We’ve proved it’s possible with little effort to write Rust programs that we can build for Linux, and get them onto an Airflow worker. Of course, I had to manually slip into the backdoor of the Airflow worker to get the Rust binary to execute … but I’m sure there are smart people on the internet who know what one little tweak I have to make to fix that bug.

Be sure to comment below if you know the problem.

All code is on GitHub.