Python Async File Operations – Juice Worth the Squeeze?

What I’ve greatly feared has come to pass. I’ve come to love on of the most confusing parts of Python. AysncIO. It has this incredible ability for data engineers building pipelines in Python to take out so much wasted IO time. It saves money. It’s faster. People think you’re smarter than you are. Tutorials are one thing but implementing it in your complex code is typically mind bending and a test of your patience and self-worth.

Below is an affiliate link, I receive compensation if you buy 🙂

In my world I’ve mostly used Async to cut down on HTTP wait times when downloading files or other such nonsense. I’ve occasionally used it in my own custom async functions. It recently dawned on me I spend a lot of time messing around with files as a data engineer. That is when I’m not using BytesIO. The downside to async is that it’s always confusing, no matter how many times you’ve done it. So, is Async file operations juice really worth the squeeze? Time to find out.

I’m using a 3gB data set from Kaggle. There are about 50 csv files included, so this should be enough to mess around with. So the plan is to really just read all the files, with and without async, and see the clock differences. I don’t care about processing the files right now. In my head I just keep questioning if all the pain of Async is really worth it for file operations.

Let’s take a crack at the normal synchronous version.

import glob

import time

def open_file(file: str):

with open(file, mode='r') as f:

content = f.read()

return len(content)

def run():

contents = []

files = glob.iglob('sofia-air-quality-dataset/*.csv')

for file in files:

content = open_file(file)

contents.append(content)

return contents

t1 = time.time()

responses = run()

for response in responses:

print(response)

t2 = time.time()

print('It took this many seconds '+str(t2-t1))

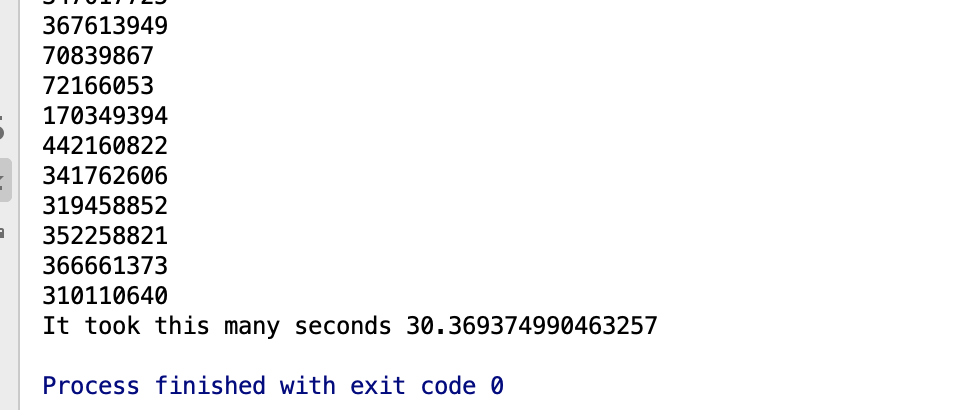

So, around 30 seconds to read all the files and get the length of the contents. Now let’s try async.

import aiofiles

import asyncio

import glob

import time

async def open_file(file: str):

async with aiofiles.open(file, mode='r') as f:

content = await f.read()

return len(content)

async def run():

tasks = []

files = glob.iglob('sofia-air-quality-dataset/*.csv')

for file in files:

task = asyncio.ensure_future(open_file(file))

tasks.append(task)

contents = await asyncio.gather(*tasks)

return contents

t1 = time.time()

loop = asyncio.get_event_loop()

future = asyncio.ensure_future(run())

responses = loop.run_until_complete(future)

for response in responses:

print(response)

t2 = time.time()

print('It took this many seconds '+str(t2-t1))

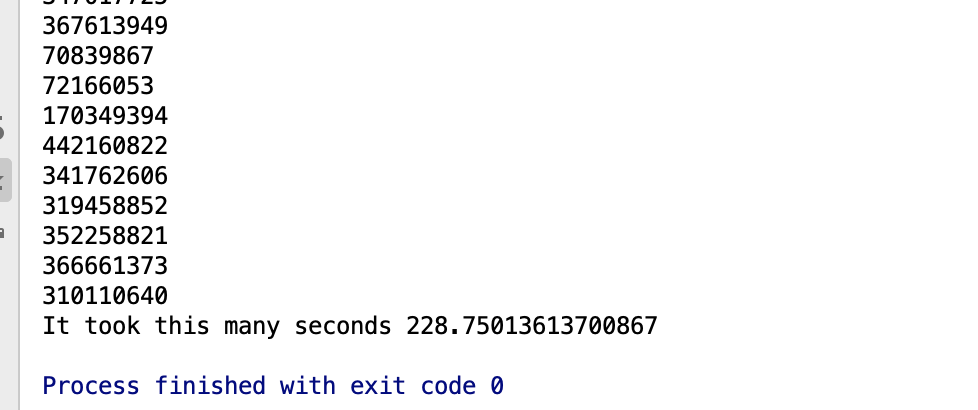

Well, that’s a pieces of junk. Almost 300 seconds, wow. I’m pretty much an Async novice, but I’m fairly certain I did that correctly. Apparently I’m not the only one who’s found that async file operations are slower.

This does make a little sense. The whole point of async is to release control of execution while waiting on some blocking piece of code. Opening and closing files is not really blocking. Reading a file may be blocking, but that work of reading a file still must be done. So the juice is not worth the squeeze on aiofiles, far from it.

My guess is there might be edge cases where async file operations might come in handy when working in a large async application, although I don’t know what that might be.