Hive Metastore in Databricks – What To Know.

Hive is like the zombie apocalypse of the Big Data world, it can’t be killed, it keeps coming back. More specifically the lesser-known Hive Metastore is the little sneaker that has wormed its way into a lot of Big Data tooling and platforms, in a quasi behind the scenes way. Many people don’t realize it, but Hive Metastore is the beating heart behind many systems, including Databricks. It’s one of those topics that sneaks up on you, ignore it happily at your own peril, till all of a sudden you need to know everything about it.

Specifically, I want to talk about Hive MetaStore as related to Databricks, how it works inside the Databricks platform, and what you need to know. I tripped myself up a lot during my initial forays into Databricks at a Production level. When you wander outside the realm of Notebooks, which you should, strange things start to happen. Databricks seems to assume you already have your own Hive Metastore, maybe like the Glue Data Catalog, or that you want to set up your own somewhere. But what if you don’t?

What is Hive Metastore?

Not going to spend a lot of time here. There is plenty of material out there on the Hive Metastore and all its gory details.

At a very high level, the classic Metastore for Hive was and is most commonly a relational database (RDBMS) that stores “metadata” about data sources used in tools like Hive or Spark.

One of the biggest challenges of Big Data technologies running in a distributed manner on large clusters, in conjunction with abnormally large datasets stored in file-based systems, is keeping a centralized tracking of this “data about the data” so that a tool like Spark on an ephemeral cluster on AWS knows where the heck that “table” of data is.

That is where the Hive Metastore comes into play.

Hive Metastore is the keeper of the keys, the little dude in the background that makes your life easier when you don’t even know it. The problem is, like with Databricks, they give it to you for free, you don’t know about it, there is minimal in-depth documentation surrounding its actual importance. This can lead to headaches. Let’s dive in.

Databricks Hive Metastore – What you need to know.

Hive Metastore isn’t all that complicated in Databricks, but it’s not obvious if you aren’t told the important role it plays and what your total options are.

The basics.

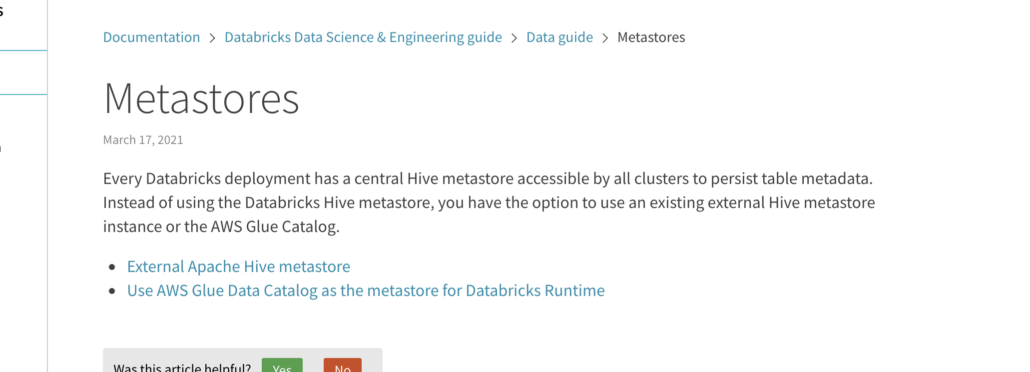

What happens if you Google “databricks hive metastore”? This is the page you get to understand what’s going on.

Yup, that’s the extent of it. I mean a little more effort would be nice.

Ok, so high level it really breaks down in a few ways.

- Databricks provided “default” Hive Metastore

- External Hive Metastore

Yup, those are your options. So what’s the big deal? Well, it gets slightly confusing if you are not an old school Data Engineer who has messed with your own Hive Metastore in the past, or if you are like me and the closest you ever got was using Glue’s catalog, which is so far obfuscated that you don’t even realize your working with a Hive Metastore.

The Databricks Hive Metastore Confusion

So this is where it got confusing for me in the beginning. If you’re new to Databricks and you’re playing around with a Notebook, that’s the most obvious place to start, you write some SQL and create a Delta Table for example.

Some magic happens, those Delta tables you created are accessible via any Notebook and all-purpose-cluster you create. If you write

CREATE TABLE hobbits

(hobbit_id STRING,

....

...)

USING DELTA

PARTITIONED BY (middle_earth, shire);Then a few desks away, your buddy can at any time write …

%sql

SELECT *

FROM hobbits;Well, how nice and convenient, thank you Hive Metastore, you truly make our lives easier.

In a universe many parsecs away, some lightyears later your boss decides its time “productionize” all this Spark and warehouse code. No problem you say, there is the perfect solution for this.

The Databricks Job API and Clusters. Job clusters and API and probably one of the best inventions of Databricks, it truly brings automation and complex workflows to Big Data Spark pipelines.

We can talk about that more later.

Databricks Job API and Cluster Hive Metastore problem.

So what happens if you are happily going along, using Airflow or something else to send jobs to the Databricks Jobs API, jobs that depend on Delta Tables, either getting them or writing them. You reference that Delta Table just like you do in all your Notebooks all day long. Except you run into a problem.

Spark just dies saying it can’t find your Delta Table(s).

Cripes you think, why can’t I access my Delta Tables, I can do it all day long with my Notebooks? It’s because of the default Hive Metastore.

It might take you a little bit to realize this, it did for me. Sometimes the obvious things are hard to see. There isn’t really any good documentation explaining this upfront to you in the Databricks documentation. But, you will eventually come to the conclusion that your Databricks Jobs is having trouble accessing the default Hive Metastore.

This is confusing because one of the two pages they have about Hive Metastore …

“Every Databricks deployment has a central Hive metastore accessible by all clusters to persist table metadata. Instead of using the Databricks Hive metastore, you have the option to use an existing external Hive metastore instance or the AWS Glue Catalog.”

– Databricks Documentation

Well … apparently NOT every Databricks deployment … aka Jobs.

Stinkers.

Using Default Hive Metastore on your Databricks JOBS.

Eventually, after a day of Googling, like me, you will realize that you are either going to have to set up your own External Hive Metastore, or figure out a way to find out how to make the Databricks Jobs clusters find the default provided Databricks Hive Metastore.

It breaks down two a few simple steps that are not easy to find, but here they are. My pain is your gain. Thank the good Lord for this Medium article.

- In UI go to Admin console, make sure “Web Terminal” is enabled.

- Start a cluster, go to Apps, then Terminal … then type

cat /databricks/hive/conf/hive-site.xml

A bunch of JBDC connection information will come up, you need to copy this for later use.

You will use these values for json configuration info when setting up your Databricks Jobs API. Like as follows.

"spark.hadoop.javax.jdo.option.ConnectionURL": f"{HIVE_METASTORE_URI}",

"spark.hadoop.javax.jdo.option.ConnectionUserName": f"{HIVE_METASTORE_USER}",

"spark.hadoop.javax.jdo.option.ConnectionPassword": f"{HIVE_METASTORE_PASS}",

"spark.hadoop.javax.jdo.option.ConnectionDriverName": f"{HIVE_METASTORE_DRIVER}",

"spark.sql.hive.metastore.version": "0.13.0",This json will be part of the 'spark_conf': SPARK_CONF that gets submitted to the JOBS API, by whatever tool your using, Airflow or otherwise.

The above connection options will now allow your Databricks Job Clusters to connect to the default Hive Metastore provided by Databricks. This is a much better option than going about the task of setting up an external Hive Metastore, yet another system for you to manage.

Musings

Databricks is the best Data Lake and Big Data processing system out there right now. They make everything so easy, their integration with Delta Lake, Databricks SQL, Notebooks, and the like give it the power of a juggernaut to take on every challenge imaginable. They almost made it too good and too easy.

The problem with being so good and easy to use, is that if you miss a spot and let confusion and obfuscation enter, it’s going to be more frustrating for your users.

The Hive Metastore is critical to how well your production Databricks Jobs run. They need better high-level documentation that explains that and explains the use and configuration of Databricks Jobs to use the default provided Metastore. Better yet, make it seamless like the Notebooks!

Heya, databricks engineer here with quite a lot of experience in this area of our product.

Your metastore should work within jobs just like it does in notebooks.

Can you shoot me an email at evan at databricks dot com with some details of your setup? I’d like to drill into this a bit more. Thanks!