I’m still amazed by how many folks hold onto stuff they love; they just can’t let it go. I get it, sorta. I’m the same way. There are reasons why people do the things they do, even if they are hard for us to understand. It blows my mind when I see on r/dataengineering that people are still using SSIS for ETL to this day.

I guess it says something about a piece of technology, whatever it is, when it refuses to roll over and die. There’s something to that software.

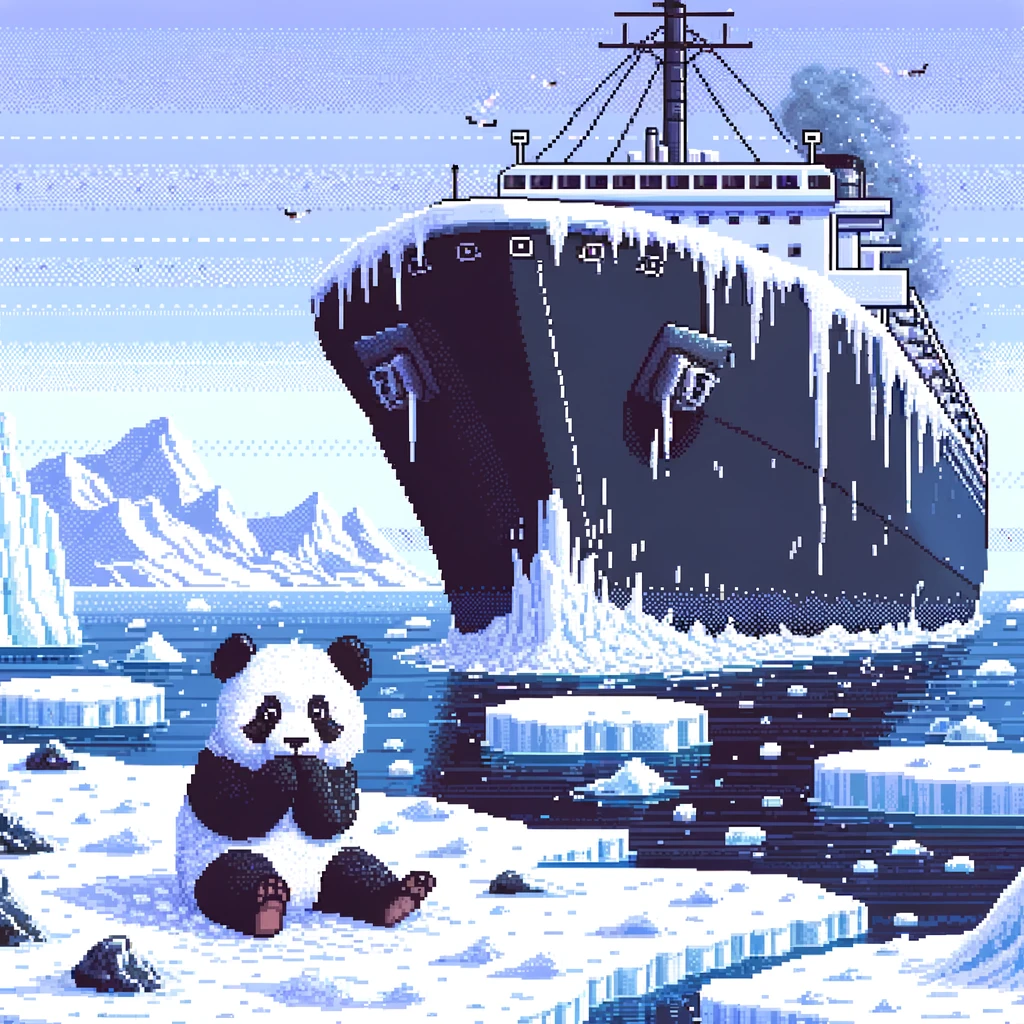

Yet even though we may take off our hats and shake the hand of Pandas, the overlord of all Python Data Folk, that doesn’t mean we can’t move on. Show respect where respect is due, but push those who are able to move on into the future. When the future arrives, you should consider making the switch.

Replacing Pandas with Polars.

In case you need a more detailed look into how you can actually replace Pandas with Polars, read more here. Really, today it’s just a philosophical bunch of thoughts about why people should move on from Pandas to Polars, why they don’t, and how to overcome those things.

Why replace?

Let’s just list some reasons.

- Pandas is slow

- Pandas can’t work on larger-than-memory (OOM) datasets

- Pandas can be cumbersome

- Pandas doesn’t have a SQL interface

- Polars has a SQL interface

- Polars is built in Rust (the newest and coolest thing)

- Polars can do what Pandas can do, better.

- Other tools and general features will be more focused on Polars in the future

- At some point, we have to accept that new things are simply better than the old thing

I mean we could go on forever.

What are the reasons people will fail to make the switch from Pandas to Polars (some of these are symptoms of other problems)?

- The claim that Pandas is too entrenched in the codebase

- Unable to dedicate the time to switch

- Afraid of the unfamiliar

- A culture that is unable to learn new tools

- The claim that some minor “thing” they do with Pandas can’t be done in Polars (missing the forest for the trees)

I think it’s more of a cultural thing when it comes to folk who don’t want to switch from Pandas to Polars. It’s probably a business that doesn’t like change, doesn’t deal with tech debt, doesn’t focus enough on Engineering improvements, and never challenges the status quo.

I’m not saying that there aren’t serious hurdles when replacing pieces of technology in any stack, but that doesn’t make it a non-starter. Most good things for us are hard, in life and Engineering. We want stability in our stack, yes, but we don’t want to be the people who are 15 years behind the changes either. We have to strike a balance.

Sometimes things come along like Polars, or Spark, or Snowflake, whatever … we can tell they are here to stay after a year or two. It’s clear the Data Engineering community and Platforms are moving in a direction, so why not move with it? No reason to stay behind and languish.
