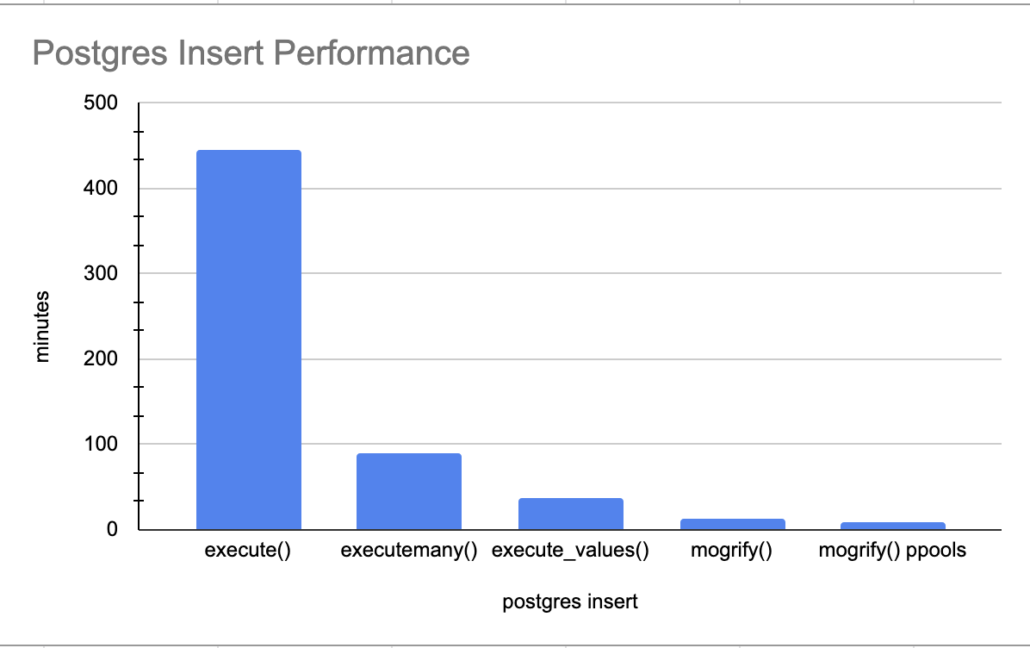

Is there any problem more classic to Data Lakes and Data Warehouses than duplicate records? You would think that after doing the same ETL for over a decade I could avoid the issue. Apparently not. It’s good never to think too highly of oneself; the duplicates can get us all. Today I want to talk about a wonderful feature of Databricks + Delta Lake MERGE statements that is perfect for quietly and insidiously injecting duplicates into your Data Warehouse or Data Lake. This is a great trick to play on your unsuspecting coworkers.