Why Data Engineers Should Use AWS Lambda Functions.

When I used to think of Lambda functions on AWS, my eyes would glaze over. I would roll my eyes and say, “I work with big data, what in the world can a silly little AWS Lambda function offer me?” I’ve had to eat my own words; those little suckers come in handy in my day-to-day engineering work. I want to talk about how every data engineer working with AWS can take advantage of Lambdas and add them to their data pipeline tool belt.

The Conundrum of AWS Lambda functions and Big Data.

You know, for a long time I just couldn’t see how an AWS Lambda function could be useful to me. They max out at 10 GB of memory (it used to be less) and time out after 15 minutes (that used to be less too). It just didn’t make a whole lotta sense how I could use AWS Lambda functions in my big data life.

AWS Lambdas are ….

- small and compact code

- runs quickly

- small resources (memory and cpu)

Big Data is usually about ….

- complex large codebase

- runs forever

- cluster compute

How to use AWS Lambdas in Big Data

So I did have an epiphany in all my data engineering journeys when it came to using AWS Lambdas in my big data pipelines, and it kinda solved two problems at once. What are two topics that give data engineers headaches because they are critical to our work, but very ambiguous at the same time?

- data quality and control.

- architecture complexity.

These two small items are always hard nuts to crack. They usually are ignored and then come back to bite you in the behind. There are a few options that I’ve found have been used to deal with these problems.

Many times data quality checks are written directly into the ETL pipelines. This can be good and bad. I don’t like that approach because it ….

- makes the ETL pipeline code more bloated and harder to debug.

- mixes processes, which makes the complexity rise.

The other common option is buying third-party data quality tools, which costs more and adds complexity and architecture overhead.

AWS Lambda … easy data quality checks.

This is where an AWS Lambda can come save the day, and it doesn’t get much simpler in terms of code or architecture. The approach is very simple, especially when using cloud storage like S3. Here are the simple steps.

- fire the Lambda on S3 file creation (when a file hits a bucket).

- open file(s), check headers and rows.

- get basic file stats and metrics and store (optional)

- if something is wrong, move file to quarantine and report error.
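The quarantine step is the one people tend to skip, so here is a minimal sketch of it. It assumes a boto3 S3 client is passed in as `s3`, and the `quarantine/` prefix and the function name `quarantine_object` are just illustrative choices of mine, not anything AWS prescribes.

```python
def quarantine_object(s3, bucket: str, key: str, prefix: str = "quarantine/") -> str:
    """Move a bad file under a quarantine prefix in the same bucket."""
    quarantine_key = prefix + key
    # S3 has no rename, so a move is a copy followed by a delete.
    s3.copy_object(
        Bucket=bucket,
        Key=quarantine_key,
        CopySource={"Bucket": bucket, "Key": key},
    )
    s3.delete_object(Bucket=bucket, Key=key)
    return quarantine_key
```

One thing to watch with this approach: if the trigger covers the whole bucket, the copy into `quarantine/` will fire the Lambda again. Scoping the S3 trigger to an incoming prefix, or quarantining into a separate bucket, avoids that loop.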

One of the best parts about this approach is early warning. Instead of waiting until the batch ETL runs and is trying to load data into your data lake or data warehouse, and only then finding out something went wrong with a file upstream, you find out the minute that data file comes into your domain.

Introduction to AWS Lambda for data engineers

There are only a few things you need to know to write some simple lambda function(s) that can start doing all the data quality hard work for you.

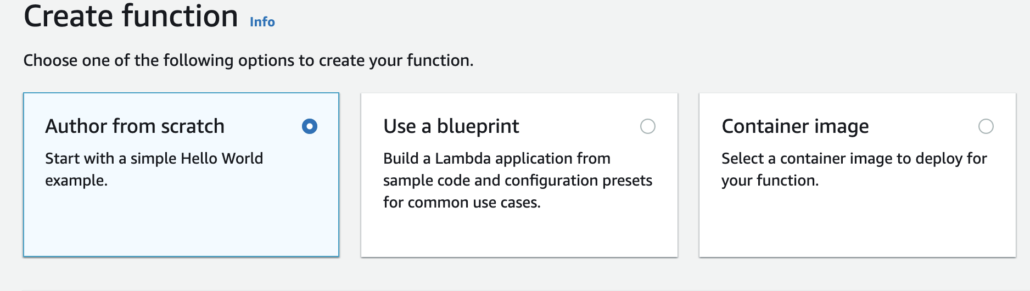

First, AWS Lambdas can take the form of ….

- codebase (for example a `.py` file)

- Docker image

What this means is that you can either package your lambda code inside a Docker image …. or just write a file and upload that.

I recommend the Docker image route because it’s easy and AWS provides pre-built Lambda images for most languages. For example, for doing data quality checks on files, the AWS-provided Python Lambda Docker image is a great place to start.

Here is how easy it is to build on top of the Python image provided by AWS…

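    FROM public.ecr.aws/lambda/python:3.8

    # Copy the function code into the Lambda task root
    COPY . ${LAMBDA_TASK_ROOT}

    # -r tells pip to install the packages listed in the file
    RUN pip3 install -r requirements.txt

    # module_name.function_name of the handler
    CMD ["my_lambda.lambda_handler"]

How do I write code for my AWS lambda function?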

The same way you write any other code. The only difference is that you “tell” your Lambda function what the entry point will be. For example, when writing a Python Lambda function you might write a bunch of code and place it in my_lambda.py, and the handler setting (the CMD value my_lambda.lambda_handler in the Dockerfile above) tells the Lambda to use the lambda_handler definition as the entry point when it is triggered.

When a trigger fires and your Lambda calls lambda_handler, two arguments are passed to this function: event and context.

    from urllib.parse import unquote_plus

    def lambda_handler(event, context):
        for record in event['Records']:
            try:
                bucket = record['s3']['bucket']['name']
                # Keys arrive URL-encoded, so decode them before use.
                key = unquote_plus(record['s3']['object']['key'])
                # ..... more code
            except Exception as error:
                print(f"Failed to process record: {error}")
                raise

You can see the above example iterating through the records (S3 file creation events) that were sent to the Lambda function, and it’s easy to grab the bucket and the key (file_uri) for the file just created.
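If you want to poke at this logic without deploying anything, a hand-built event works fine. This is a sketch under assumptions: `SAMPLE_EVENT` is a trimmed-down stand-in for what S3 actually sends (real events carry many more fields), and the bucket name, key, and helper name `created_objects` are made up for illustration.

```python
from urllib.parse import unquote_plus

# Trimmed-down S3 "object created" event; real events carry more fields.
SAMPLE_EVENT = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "my-landing-bucket"},
                # S3 URL-encodes the key, so a space shows up as "+".
                "object": {"key": "incoming/daily+accounts.csv", "size": 1024},
            },
        }
    ]
}

def created_objects(event):
    """Return (bucket, key) pairs for every record in an S3 event."""
    pairs = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs
```

Calling `created_objects(SAMPLE_EVENT)` gives back the bucket and the decoded key, which is also a handy reminder of why `unquote_plus` is there: without it, a file name with a space in it would not match the real object key.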

And once you have this information the sky is the limit with Python! Most data engineers have used boto3 or the AWS CLI, so getting to the file that was just created and testing it is now easy peasy.

I won’t write all the code here but as an example, using `boto3` it’s easy to download the file just created.

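    import boto3
    from urllib.parse import unquote_plus

    s3 = boto3.client('s3')

    def lambda_handler(event, context):
        for record in event['Records']:
            try:
                bucket = record['s3']['bucket']['name']
                key = unquote_plus(record['s3']['object']['key'])
                # /tmp is the only writable directory inside a Lambda.
                s3.download_file(bucket, key, '/tmp/my_local_file_name.csv')
            except Exception as error:
                print(f"Failed to download file: {error}")
                raise

How to validate data quality with lambda functions.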

So now we have an easy Lambda function that can download (or stream) files just created in an S3 bucket. What is the simplest way to enforce some data quality?

- check the file can actually be opened as expected (were you getting csv and now parquet files showed up?)

- check headers are intact and have not changed (people love changing source files without telling you)

- read a row or two of data (ensure data types have not changed etc.)
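The first and third checks in that list can be sketched roughly like this. Both helper names (`sniff_format`, `row_matches`) are my own, and the approach is an assumption about how you might do it: look at the first few bytes of the file for a known signature (Parquet files start with `PAR1`, gzip files with `1f 8b`), and try converting each value in a row with the type you expect.

```python
import codecs
from datetime import date

def sniff_format(first_bytes: bytes) -> str:
    """Guess the file format from its first few bytes."""
    if first_bytes.startswith(b"PAR1"):      # Parquet magic number
        return "parquet"
    if first_bytes.startswith(b"\x1f\x8b"):  # gzip magic number
        return "gzip"
    decoder = codecs.getincrementaldecoder("utf-8")()
    try:
        # Incremental decoding tolerates a character cut off at the end.
        decoder.decode(first_bytes)
    except UnicodeDecodeError:
        return "unknown"
    return "text"

def row_matches(row, converters) -> bool:
    """True if the row has the expected width and every value converts."""
    if len(row) != len(converters):
        return False
    try:
        for value, convert in zip(row, converters):
            convert(value)
    except ValueError:
        return False
    return True

# Hypothetical layout: an integer id, a name, and an ISO date.
EXPECTED = [int, str, date.fromisoformat]
```

The bytes for `sniff_format` can come from the file you already downloaded, or from a ranged `get_object` call if you would rather not pull the whole thing. `row_matches(["42", "acme", "2021-06-01"], EXPECTED)` passes, while a row where the date suddenly arrives as `06/01/2021` does not.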

For example, stream the first few records, including headers, from a csv file…

    import csv

    # Both functions are methods of a checker class that keeps the local
    # file path in self.download_path.
    def stream_csv_file(self, delimiter: str = '\t', quotechar: str = '"', newline: str = ''):
        # The with block closes the file once the generator is exhausted.
        with open(self.download_path, newline=newline) as csv_file:
            c_reader = csv.reader(csv_file, delimiter=delimiter, quotechar=quotechar)
            for row in c_reader:
                yield row

    def process_account_file(self):
        rows = self.stream_csv_file(delimiter=',')
        for i, row in enumerate(rows):
            if i == 0:
                # The first row is the header and must match exactly.
                assert row == ['column_1', 'column_2', 'column_3']
                continue

How easy is that? Next you just add a Slack notification message or similar. As new files hit your cloud storage, the Lambda will be triggered and each file’s headers and first few rows will be validated.
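For the Slack part, here is a minimal sketch using only the standard library, assuming you have created a Slack incoming webhook (which accepts a JSON body with a `text` field). The function names and the message wording are mine, and the webhook URL is something you supply yourself, ideally from an environment variable rather than hard-coded.

```python
import json
import urllib.request

def build_slack_request(webhook_url: str, bucket: str, key: str, problem: str):
    """Build the POST request for a Slack incoming webhook."""
    payload = {"text": f"Data quality check failed for s3://{bucket}/{key}: {problem}"}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def notify_slack(webhook_url: str, bucket: str, key: str, problem: str, timeout: int = 5) -> int:
    """Send the message and return the HTTP status code."""
    request = build_slack_request(webhook_url, bucket, key, problem)
    # A short timeout keeps a slow Slack call from eating the Lambda's run time.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Splitting the request building from the sending keeps the message format easy to test without touching the network, and sticking to `urllib` means there is nothing extra to add to requirements.txt.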

None of this code is tied to your normal ETL processes, and you get notified if a file goes bad, hopefully before your actual pipeline(s) run. No surprises!

Also, the fact that this code is logically and physically separated from the other ETL pipeline code and transformations makes both sets of code easier to understand and manage. Neither piece is complex, but when you start to combine normal transformation code with this type of logic, things can get a little fuzzy and confusing.

Musings on AWS lambda functions in the “Big Data” world.

I think that most people don’t think about Lambda functions when it comes to big data or data pipelines. They just don’t make sense for normal processing because of the compute and resource requirements.

I’ve also noticed over the years how data quality and validation are always a source of frustration and pipeline breakage. Source files always change. This is where AWS Lambda functions can provide valuable benefits. Whether you get csv, tsv, txt, or parquet files doesn’t really matter; changes to those files and data can be frustrating and hard to catch.

The pre-built AWS-provided Python images are a no-brainer. They provide a solid base on which you can pip install whatever packages you need and open and process pretty much any file normally found in an ETL pipeline. Streaming a few records from that file and validating them is easy, and now you can find out ahead of time when something has changed.