Running dbt on Databricks has never been easier. The integration between dbtcore and Databricks could not be more simple to set up and run. Wondering how to approach running dbt models on Databricks with SparkSQL? Watch the tutorial below.

There are things in life that are satisfying—like a clean DAG run, a freshly brewed cup of coffee, or finally deleting 400 lines of YAML. Then there are things that make you question your life choices. Enter: setting up Apache Polaris (incubating) as an Apache Iceberg REST catalog.

Let’s get one thing out of the way—I didn’t want to do this.

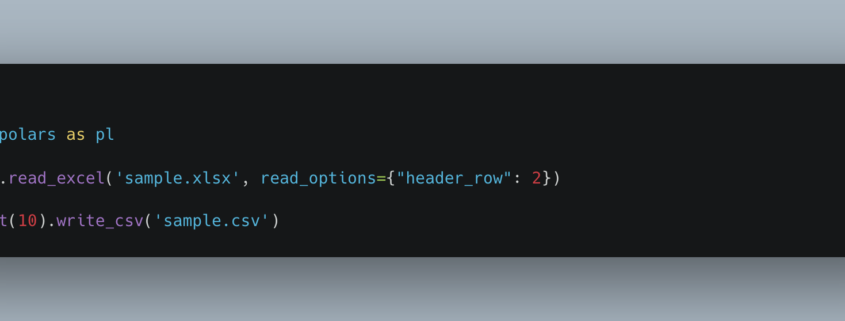

I make it my duty in life to never have to open an Excel file (xlsx); I feel like if I do, then I made a critical error in my career trajectory. But, I recently had no choice but to open an Excel on a Mac (or try) to look at some sample data from a client.

Context and Motivation

- dbt (Data Build Tool): A popular open-source framework that organizes SQL transformations in a modular, version-controlled, and testable way.

- Databricks: A platform that unifies data engineering and data science pipelines, typically with Spark (PySpark, Scala) or SparkSQL.

The post explores whether a Databricks environment—often used for Lakehouse architectures—benefits from dbt, especially if a team heavily uses SQL-based transformations.

The blog post reviews an Apache Incubating project called Apache XTable, which aims to provide cross-format interoperability among Delta Lake, Apache Hudi, and Apache Iceberg. Below is a concise breakdown from some time I spend playing around this this new tool and some technical observations:

Interesting links

Here are some interesting links for you! Enjoy your stay :)Pages

Categories

Archive

- March 2025

- February 2025

- January 2025

- December 2024

- November 2024

- October 2024

- September 2024

- August 2024

- July 2024

- June 2024

- May 2024

- April 2024

- March 2024

- February 2024

- January 2024

- December 2023

- November 2023

- October 2023

- September 2023

- August 2023

- July 2023

- June 2023

- May 2023

- April 2023

- March 2023

- February 2023

- January 2023

- December 2022

- November 2022

- October 2022

- September 2022

- August 2022

- July 2022

- June 2022

- May 2022

- April 2022

- March 2022

- February 2022

- January 2022

- December 2021

- November 2021

- October 2021

- September 2021

- August 2021

- July 2021

- June 2021

- May 2021

- April 2021

- March 2021

- February 2021

- January 2021

- December 2020

- November 2020

- October 2020

- September 2020

- August 2020

- July 2020

- June 2020

- May 2020

- April 2020

- March 2020

- January 2020

- December 2019

- November 2019

- October 2019

- September 2019

- August 2019

- July 2019

- May 2019

- March 2019

- February 2019

- January 2019

- December 2018

- November 2018

- October 2018

- September 2018

- July 2018

- June 2018

- May 2018

- April 2018

- March 2018

- February 2018